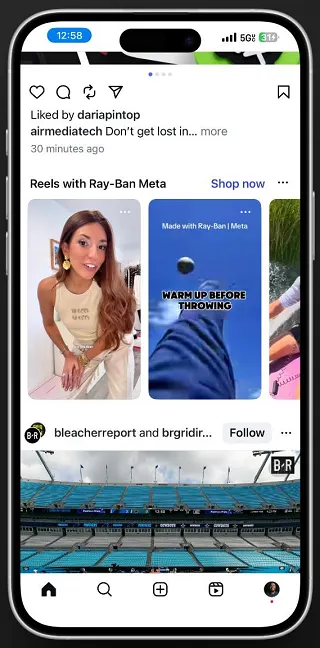

This week, Meta began featuring Reels captured with its Ray-Ban Meta smart glasses within its apps. Dedicated in-stream panels now highlight videos created with the device, as seen in an example shared by social media expert Lindsey Gamble.

It’s a smart, if expected, strategy.

In my 2026 predictions, I flagged this as a major opportunity for Meta:

“With more video content being captured through these devices, Meta is building an expanding library to showcase. Expect to see Stories taken with glasses appear with unique colored rings or watermarks on video thumbnails, as Meta effectively markets its devices through this material.”

While the current update doesn’t include those exact features, the idea is the same: give content recorded through Meta’s AI glasses special visibility. This not only promotes the product but also adds more video material to fuel engagement across its platforms.

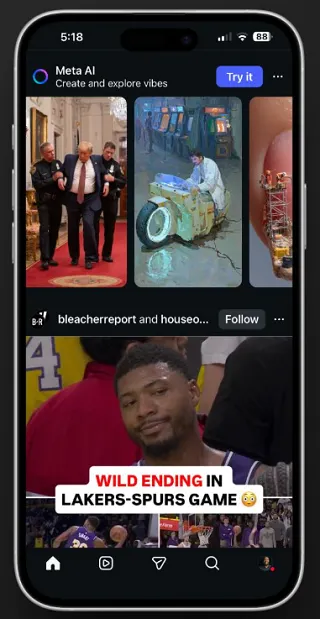

At the same time, Meta is testing a similar in-feed section for clips created in Vibes, its generative AI video feature.

The value of AI-generated clips is questionable, especially for apps built on real human connection. Still, since video remains the key driver of engagement across Meta’s platforms, even AI-generated content helps hold user attention and extend scrolling time.

The AI glasses initiative, however, offers real potential, showcasing the video capture quality and features of Meta’s smart eyewear. As Meta moves closer to introducing full AR glasses, this approach could help highlight new immersive experiences.

After all, every video captured through these glasses doubles as an ad for the product itself. So it makes sense for Meta to put that content front and center, and for creators, it could open up new opportunities for visibility and brand promotion.